Errors in health care are at an unacceptably high level and are a leading cause of death and injury.

The Australian public is seemingly helpless, as no one in power knows what to do. The issue is not getting the attention it should from the Prime Minister and the federal, state and territory Ministers for Health. Additionally, the knowledge that other industries have used to improve safety is rarely applied in health care. Although more needs to be learnt, there are actions that can be taken today to improve safety in health care. Medical products can be designed to be safer in use, jobs can be designed to minimise the likelihood of errors, and much can be done to reduce the complexity of care processes. [1]

Health care is decades behind other industries in terms of creating safer systems

Much of modern safety thinking grew out of military aviation. Until World War II, crashes were viewed primarily as individually caused and safety meant motivating people to “be safe”. During the war, generals lost aircraft and pilots in stateside operations and came to realise that planning for safety was as important to the success of a mission as combat planning. [2]

Building on the successful experience and knowledge of military aviation, civilian aviation takes a comprehensive approach to safety, with programs aimed at setting and enforcing standards, crash investigation, incident reporting, and research for continuous improvement. [3]

Pilots and doctors operate in complex environments where teams interact with technology

In both domains, risk varies from low to high, with threats coming from a variety of sources in the environment. Safety is paramount for both professions, but cost issues can influence the commitment of resources for safety efforts. Aircraft crashes are infrequent, highly visible, and often involve massive loss of life in a single event. They receive worldwide publicity, which results in exhaustive investigation into causal factors, public reports, and remedial action. Research by NASA into aviation incidents has found that 70% involve human error. [4]

In contrast, medical errors happen to individual patients and seldom receive national publicity. More importantly, there is no standardised method of investigation, documentation, and dissemination. The US Institute of Medicine (IOM) estimates that each year between 44,000 and 98,000 people die as a result of medical errors. In Australia, a minimum of 18,000 people die each year from medical errors in a population of 19 million, so Australia’s health system safety record is cause for justifiable alarm. When error is suspected, litigation and new regulations are threats in both medicine and aviation. [5]
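The US and Australian figures above are easier to compare as rates per 100,000 people. The sketch below is illustrative only. The death counts and the Australian population come from the text, but the US population of roughly 280 million is an assumption (an approximate figure for around the year 2000) and is not stated in the source. The function and variable names are hypothetical.

```python
def deaths_per_100k(deaths: int, population: int) -> float:
    """Return annual deaths per 100,000 people."""
    return deaths / population * 100_000


# Figures taken from the text.
AUS_DEATHS_MIN = 18_000
AUS_POPULATION = 19_000_000
US_DEATHS_LOW = 44_000
US_DEATHS_HIGH = 98_000

# ASSUMPTION: approximate US population around 2000; not given in the source.
US_POPULATION_ASSUMED = 280_000_000

aus_rate = deaths_per_100k(AUS_DEATHS_MIN, AUS_POPULATION)
us_rate_low = deaths_per_100k(US_DEATHS_LOW, US_POPULATION_ASSUMED)
us_rate_high = deaths_per_100k(US_DEATHS_HIGH, US_POPULATION_ASSUMED)

print(f"Australia (minimum): {aus_rate:.1f} per 100,000")
print(f"US (IOM range): {us_rate_low:.1f} to {us_rate_high:.1f} per 100,000")
```

Under that population assumption, the Australian minimum works out to roughly 95 deaths per 100,000 people, against roughly 16 to 35 per 100,000 for the US range. The comparison is only as good as the underlying estimates, which may not have been produced by the same method.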

- In aviation, crashes are usually highly visible.

- As a result, aviation has developed standardised methods of investigating, documenting, and disseminating errors and their lessons.

- Although operating theatres are not cockpits, medicine could learn from aviation.

- Observation of flights in operation has identified failures of compliance, communication, procedures, proficiency, and decision making as contributors to errors.

- Surveys in operating theatres have confirmed that pilots and doctors have common interpersonal problem areas and similarities in professional culture.

- Accepting the inevitability of error and the importance of reliable data on error and its management will allow systematic efforts to reduce the frequency and severity of adverse events. [6]

The aviation industry has drawn lessons from many facets of crashes in order to reduce them. One initiative MEAG believes is worth establishing is a “confidential hospital incident reporting system” for the anonymous reporting of incidents in Australian hospitals. Clinicians already report incidents to us. They do so because they are not being listened to in the hospitals where they work and are hesitant to speak up for obvious career reasons. An anonymous reporting system is a vital component of making hospital systems safer for patients.

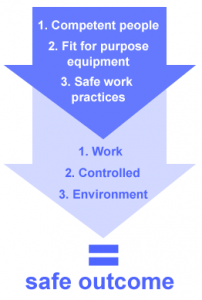

Safety needs 3 basic components:

If hospitals and doctors continue to hide their error reduction efforts from the public, they cannot expect our trust. One definition of insanity is doing the same thing over and over again and expecting a different outcome.

Time to do something different.